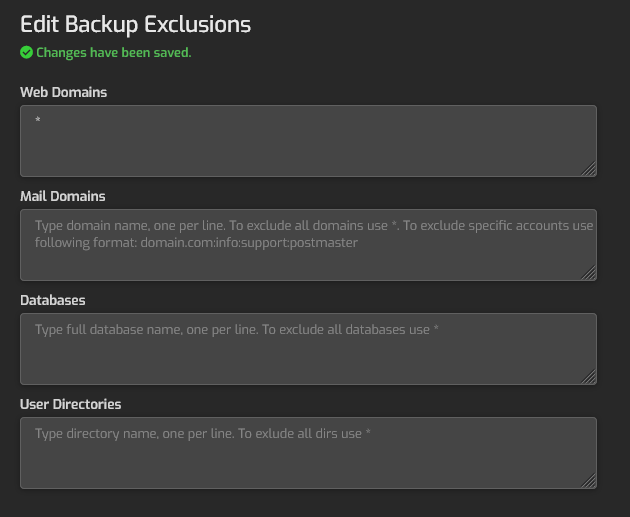

Ok, so this may sound a bit weird! Currently I have my backups set with the following exclusions:

This works fine to the extent that it excludes the web/* domains. The problem is that I still want the domains re-created with the SSL, configs etc. when doing a restore. Is there a way to do this? I tried a rule such as:

*/public_html/*

or

public_html/*

But they just come up with an error about not having enough disk space.

Is there an alternative method that would back up the user + configs, without all the actual files in public_html (100 GB+ worth, which we already back up via another method)?

Thanks!

Andy

Thanks for the quick reply. Unfortunately not:

v-backup-user chambres

Current space on the drive:

df -h ./

The actual “chambres” user account is:

/home/chambres/web# du -sh ./

109G ./

The DBs are quite large too (maybe a couple of GB).

Cheers

Andy

Looking at the code, I’m not sure there is a way to do it currently?

# Backup files

if [ "$BACKUP_MODE" = 'zstd' ]; then

tar "${fargs[@]}" -cpf- * | pzstd -"$BACKUP_GZIP" - > $tmpdir/web/$domain/domain_data.tar.zst

else

tar "${fargs[@]}" -cpf- * | gzip -"$BACKUP_GZIP" - > $tmpdir/web/$domain/domain_data.tar.gz

fi

I hacked mine by adding an --exclude:

# Backup files

if [ "$BACKUP_MODE" = 'zstd' ]; then

tar "${fargs[@]}" --exclude=public_html -cpf- * | pzstd -"$BACKUP_GZIP" - > $tmpdir/web/$domain/domain_data.tar.zst

else

tar "${fargs[@]}" --exclude=public_html -cpf- * | gzip -"$BACKUP_GZIP" - > $tmpdir/web/$domain/domain_data.tar.gz

fi

That seems to do the trick, but will get lost on any update. I’m just trying to decide if there is a better way to do this that is future proof
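For anyone who wants to check what such an exclude leaves in the archive before patching the script, here is a minimal sketch. The function name `demo_exclude` and the directory names are made up for illustration; it only assumes a tar that supports `--exclude` (GNU tar does) and is not part of Hestia.

```shell
#!/usr/bin/env bash
# Hypothetical demo: build a tiny fake domain directory, archive it with
# public_html excluded, and list what ended up in the archive.
demo_exclude() {
    local work
    work="$(mktemp -d)" || return 1

    # Fake layout: one directory we want to skip, one we want to keep.
    mkdir -p "$work/domain/public_html" "$work/domain/private"
    echo 'site'   > "$work/domain/public_html/index.html"
    echo 'secret' > "$work/domain/private/notes.txt"

    # Same shape as the hacked command above: exclude first, then archive "*".
    ( cd "$work/domain" && tar --exclude=public_html -cpf "$work/out.tar" * )

    # List the archive members; public_html should not appear.
    tar -tf "$work/out.tar"
    rm -rf "$work"
}

demo_exclude
```

Listing the archive with `tar -tf` is a cheap way to confirm an exclude pattern before running a full backup of a 100 GB+ account.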

UPDATE: Oh actually, you do have fargs … I wonder why that’s not working

Cheers

Andy

eris

January 25, 2023, 2:47pm

5

It is working with: domain.com:public_html

I do the same for my own server…

Ok so doing some more testing. If I exclude:

domain.org:public_html

That works fine. Printing out fargs I get:

--exclude=./logs/* --exclude=public_html/*

So the issue seems to be around the * syntax (for all domains). I’ll do some digging to see if I can find a workaround.
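As a rough illustration of how an entry like the one above turns into the printed fargs, here is a simplified sketch. The function `build_excludes` is hypothetical, and it assumes every listed path is a directory (the real script also handles plain files), so treat it as a model rather than the actual v-backup-user logic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "domain:path1:path2" into tar --exclude arguments.
# Assumption: every path is a directory, so "/*" is appended to each one.
build_excludes() {
    local entry="$1" xpath
    local -a parts

    # Split the entry on ":"; parts[0] is the domain, the rest are paths.
    IFS=':' read -r -a parts <<< "$entry"

    # fargs is deliberately global so the caller can pass it to tar.
    fargs=(--exclude='./logs/*')
    for xpath in "${parts[@]:1}"; do
        fargs+=(--exclude="$xpath/*")
    done
}

build_excludes 'domain.org:public_html'
printf '%s\n' "${fargs[@]}"
```

With an entry starting with `*:` nothing selects it for a given domain in the first place, which is why the wildcard case never reaches this step.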

eris

January 25, 2023, 2:50pm

7

user_exclusions=$(grep 'USER=' $USER_DATA/backup-excludes.conf \

| awk -F "USER='" '{print $2}' | cut -f 1 -d \')

fi

if [ -f "$USER_DATA/web.conf" ] && [ "$web_exclusions" != '*' ]; then

usage=0

domains=$(grep 'DOMAIN=' $USER_DATA/web.conf \

| awk -F "DOMAIN='" '{print $2}' | cut -f 1 -d \')

for domain in $domains; do

exclusion=$(echo -e "$web_exclusions" | tr ',' '\n' | grep "^$domain$")

if [ -z "$exclusion" ]; then

# Defining home directory

home_dir="$HOMEDIR/$user/web/$domain/"

exlusion=$(echo -e "$web_exclusions" | tr ',' '\n' | grep "^$domain:")

fargs=()

if [ -n "$exlusion" ]; then

xdirs=$(echo -e "$exlusion" | tr ':' '\n' | grep -v "$domain")

for xpath in $xdirs; do

fargs+=(--exclude="$xpath")

Looks like it sorts that part out

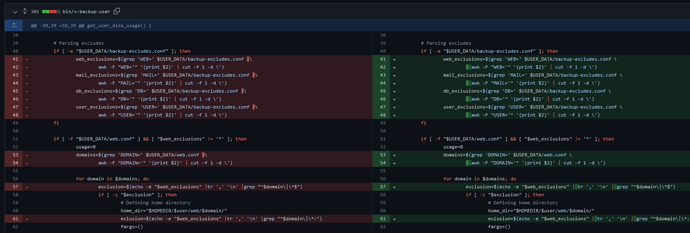

Yeah. The problem seems to be with:

exlusion=$(echo -e "$WEB" |tr ',' '\n' |grep "^$domain:")

It’s looking for $domain, which is fine if you put the domain. But it’s missing the * wildcard for all domains. I’ve tried playing with:

exlusion=$(echo -e "$WEB" |tr ',' '\n' |grep "^$domain|\*:")

But that doesn’t work

eris

January 25, 2023, 3:03pm

9

We need to extend the check: if * is used, the domain should always be selected…

Yeah. It works if we do:

echo -e "*:public_html" |tr ',' '\n' |grep "^\*:"

But not with the |:

echo -e "*:public_html" |tr ',' '\n' |grep "^foo.com|\*:"

Do I need to escape something? I’ve tried wrapping it:

echo -e "*:public_html" |tr ',' '\n' |grep "^(foo.com|\*):"

But still no joy

UPDATE: I got it

exlusion=$(echo -e "$WEB" |tr ',' '\n' |grep "^$domain\|\*:")

Looks like I needed to escape | as well. I’ll see if I can create a PR for it for review (just trying to remember how I did the fork last time ;))
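Some background on why the earlier attempts failed: in grep's default (basic) syntax, a bare `|` and bare parentheses are literal characters, and `\|` is the GNU spelling of "or". With `grep -E` they are operators without any escaping. Note also that `^$domain\|\*:` reads as "starts with the domain" OR "contains `*:`", so the `^` and the `:` each apply to one branch only. Below is a hedged sketch of a stricter variant; the helper name `match_exclusions` is made up for illustration and is not what the PR uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the exclusion entries that apply to a domain,
# i.e. entries starting with "<domain>:" or with the "*:" wildcard.
match_exclusions() {
    local web="$1" domain="$2"

    # Escape dots so "foo.com" does not also match "fooXcom".
    local escaped="${domain//./\\.}"

    # -E enables (a|b) alternation; the group makes "^" and ":" apply
    # to both the domain branch and the wildcard branch.
    printf '%s\n' "$web" | tr ',' '\n' | grep -E "^(${escaped}|\*):"
}

match_exclusions '*:public_html,bar.com:cache' 'foo.com'
```

This selects the `*:public_html` entry for foo.com and ignores the entry that belongs to bar.com.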

Cheers

Andy

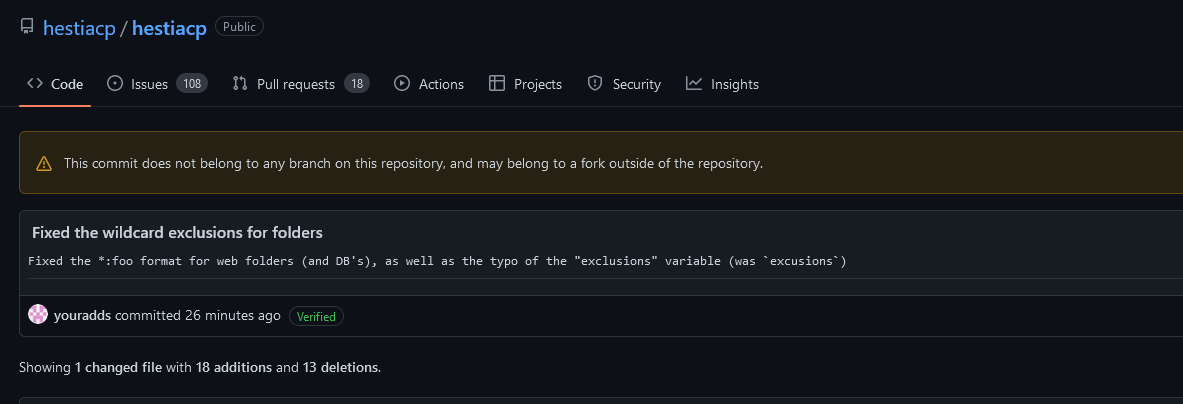

PR submitted

The problem still exists around the “not enough disk space”, so I have to comment that out. I’m not sure what a better solution would be.

Looking at it, there are more places that could do with this tweak. It also fixed the disk space calculations (as it’s now correctly ignoring public_html when working out the required space). I just tweaked the file, but my editor changed all of the tabs to spaces! I’ve fixed that now, but I’m trying to work out how to update my file before submitting it.

Hmm, I can’t figure it out. There are weird things going on when I compare:

You can see my modified file here:

I’m not sure where it’s getting the |\ stuff in the file comparison?

Ok so it got late last night, so picking up again today. It looks like something didn’t quite happen right:

v-restore-user chambres chambres.2023-01-26_05-16-01.tar

-- WEB --

**ALL MISSING HERE**

-- MAIL --

2023-01-26 06:39:22 cdn-org.e-reserve.org

2023-01-26 06:39:23 chambresdhotes.org

2023-01-26 06:39:55 resa.chambresdhotes.org

-- DB --

2023-01-26 06:39:57 chambres_community

/backup/tmp.zVr79uU6OC/db/chambres_community/chambres_community.mysql.sql.zst: 452828034 bytes

2023-01-26 06:40:14 chambres_links

/backup/tmp.zVr79uU6OC/db/chambres_links/chambres_links.mysql.sql.zst: 5884334498 bytes

-- CRON --

2023-01-26 06:51:09 0 cron jobs

-- USER FILES --

2023-01-26 06:51:09 fix_property_types.log

2023-01-26 06:51:09 .npm

2023-01-26 06:51:09 move-mobi-to-https

2023-01-26 06:51:09 .vscode-server

2023-01-26 06:51:09 .wget-hsts

2023-01-26 06:51:09 make-new-villages-live.cgi

2023-01-26 06:51:09 .bash_history

2023-01-26 06:51:09 category_structure.log

2023-01-26 06:51:09 .ssh

2023-01-26 06:51:10 remote-backup.sh

2023-01-26 06:51:10 .bashrc

2023-01-26 06:51:10 closest_towns.log

2023-01-26 06:51:10 update_distances.log

2023-01-26 06:51:10 .profile

2023-01-26 06:51:10 modules.cgi

2023-01-26 06:51:10 build-changed.sh

2023-01-26 06:51:10 .composer

2023-01-26 06:51:10 .local

2023-01-26 06:51:10 description_urls.log

2023-01-26 06:51:10 remote-backup.sh.bak

2023-01-26 06:51:10 export-local-csv-files.sh

2023-01-26 06:51:10 test.cgi

2023-01-26 06:51:10 copy-site.sh

2023-01-26 06:51:10 symlinks.txt

2023-01-26 06:51:11 build-all.sh

2023-01-26 06:51:11 counters.log

2023-01-26 06:51:11 .cache

2023-01-26 06:51:11 nohup.out

2023-01-26 06:51:11 learn-spam.sh

2023-01-26 06:51:11 update_search_sorts.log

2023-01-26 06:51:11 .config

2023-01-26 06:51:11 dbs

2023-01-26 06:51:32 backup

2023-01-26 06:51:32 .bash_logout

I’m just having a look to see if I can work it out. Maybe something needs tweaking in v-restore-user, to the same effect as what I changed in v-backup-user (i.e. around the *)?

Interestingly, actually, it looks like it DID work… but the bit missing is in the /conf folder?

I found the problem - but I can’t figure out how to get around it. Basically, we have:

# Define exclude arguments

exlusion=$(echo -e "$WEB" | tr ',' '\n' | grep "^$domain\|\*:")

set -f

fargs=()

fargs+=(--exclude='./logs/*')

if [ -n "$exlusion" ]; then

xdirs="$(echo -e "$exlusion" | tr ':' '\n' | grep -v $domain)"

for xpath in $xdirs; do

if [ -d "$xpath" ]; then

fargs+=(--exclude=$xpath/*)

echo "$(date "+%F %T") excluding directory $xpath"

msg="$msg\n$(date "+%F %T") excluding directory $xpath"

else

echo "$(date "+%F %T") excluding file $xpath"

msg="$msg\n$(date "+%F %T") excluding file $xpath"

fargs+=(--exclude=$xpath)

fi

done

fi

set +f

In my case, $exclusion is: *:public_html

What I guess it needs to do, is replace * with $domain if it exists in the string. Doing a test this works:

sed "s/\\*/foo/g" <<< *:public_html

foo:public_html

But in the bash script it doesn’t:

sed "s/\\*/$domain/g" <<< $exlusion

I’ve tried * , just *, etc. I’ve even tried it as:

exclusion="${exlusion//\\*/$domain}"

Yet it remains as *:public_html.

Any pointers? As mentioned before, bash isn’t my programming language

Ok I got it!

if [[ "$exclusion" =~ '*' ]]; then

exclusion="${exclusion/\*/$domain}"

fi
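A small standalone sketch of that substitution, for reference. The function name `expand_wildcard` is hypothetical; the point is that `\*` in the pattern is a literal asterisk, whereas the earlier `\\*` attempt looks for a literal backslash followed by anything, which never occurs in `*:public_html`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace a leading "*" wildcard in an exclusion entry
# with the concrete domain, leaving domain-specific entries untouched.
expand_wildcard() {
    local entry="$1" domain="$2"

    # Only rewrite entries that start with the "*:" wildcard.
    if [[ "$entry" == '*:'* ]]; then
        # "\*" is a literal asterisk; a single "/" replaces the first match only.
        entry="${entry/\*/$domain}"
    fi
    printf '%s\n' "$entry"
}

expand_wildcard '*:public_html' 'foo.com'
```

After the rewrite, the later `grep -v $domain` step in the script can strip the domain part the same way it does for a per-domain entry.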

I’m still playing with it to see if this actually works this time (it’s a 5 GB backup, so it takes a while to download from the live server, put up on the new one, and try a restore :)). There is also a typo in that script: exlusion should be exclusion. That is where part of my problem came from, as I was doing the replacement on $exclusion.

I’ve cancelled the old branch (to get rid of all the weirdness that happened yesterday), and just created a new one with the correct changes. It all looks in order now.

Although, I’m not sure if this is normal for a submission?