Hey guys, I recently saw this post on the Hestia forums where nobody seemed to have a clear answer on whether OpenLiteSpeed actually handles load better, so I decided to run some tests myself.

Server Specs:

I ran the tests on Oracle Cloud's Always Free tier with the maximum configuration, so it's easy to replicate.

Ampere, 4 vCPU cores

24GB RAM

200 GB disk space

Ubuntu 22.04

All tests were run against the default homepage of a clean WordPress install (2024 default theme).

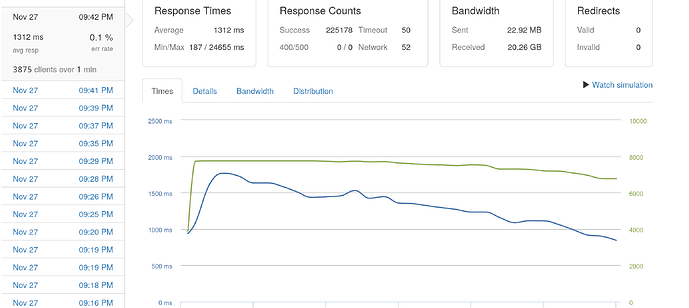

CyberPanel Test

For the first test, I installed CyberPanel with OpenLiteSpeed, created a website, installed WordPress, and ran three load tests with 4,000 to 8,000 clients per second.

As you can see, the server handled the load pretty well: after the initial spike, latency stayed below roughly 250 to 500 ms, depending on the test.

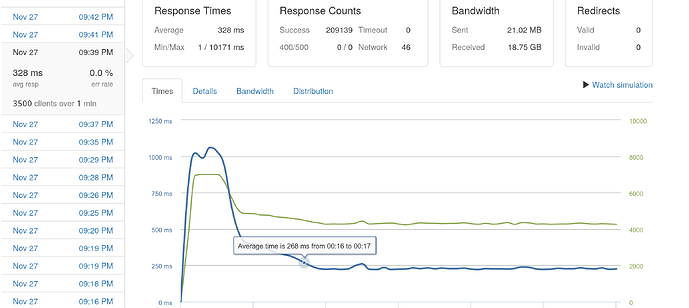

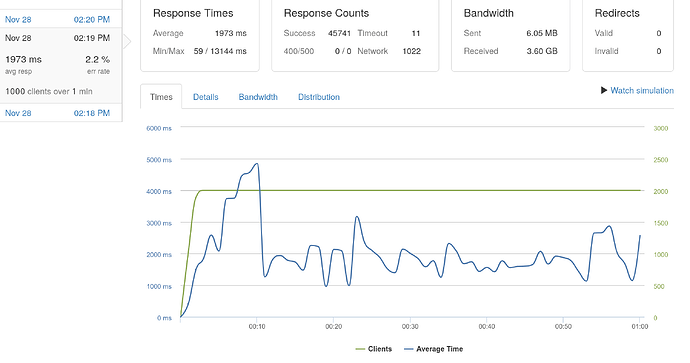

Hestia with Apache + PHP-FPM Test

For the second test, I installed Hestia with Apache and PHP-FPM using the installer arguments, with Nginx in front as a reverse proxy. I ran one test with 2,000 clients per second.

Apache was already doing worse, even at this lower load: response times went above 2 seconds at some points.

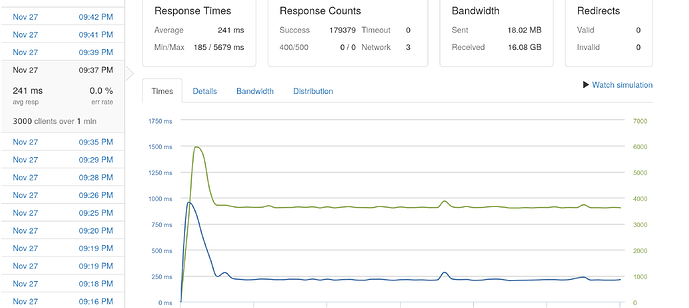

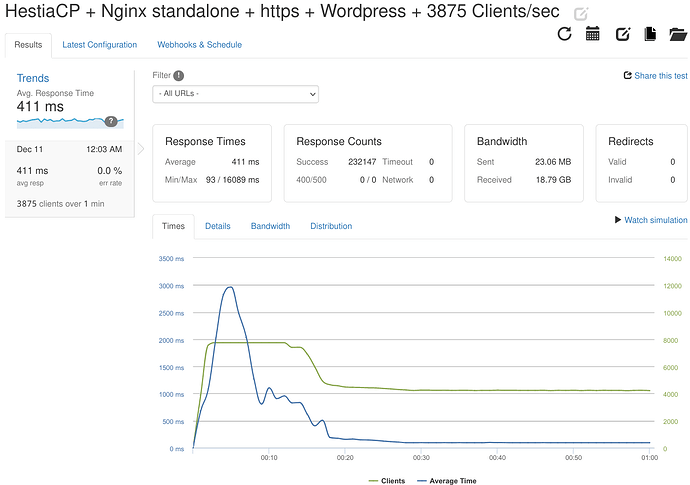

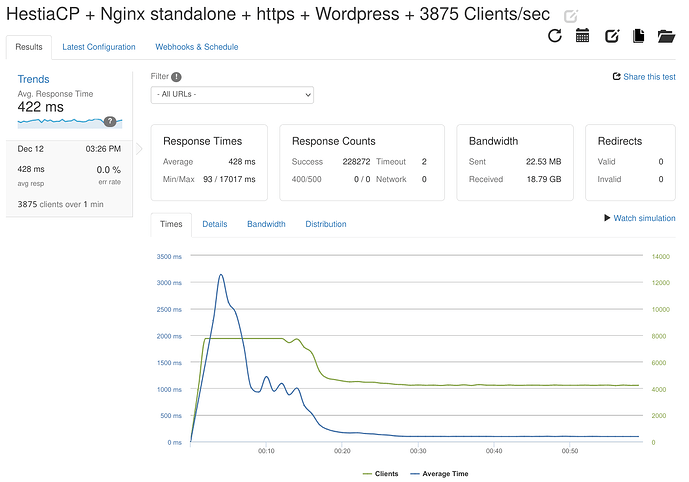

Hestia with Nginx + PHP-FPM Test

For the last test, I ran Nginx as the web server with PHP-FPM and no Apache. I also enabled the FastCGI cache in the settings. Again, I ran one test with 2,000 clients per second.

Nginx held up better, with response times of about 1 second at 2,000 clients.

Conclusion

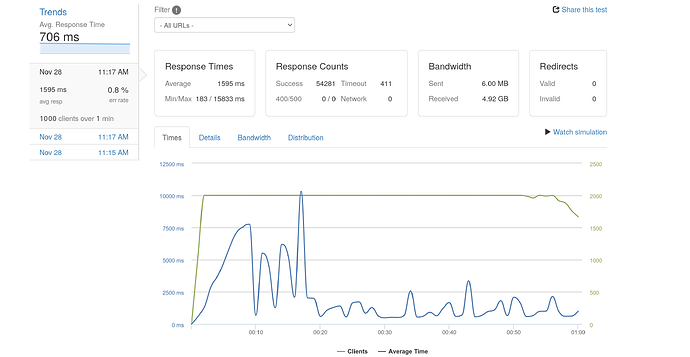

I'm really torn after seeing these results. The gaps are much wider than I ever expected, and not in a good way. After more inspection and some fiddling with ApacheBench, I noticed that during the load test on Hestia with Nginx, CPU usage hit a full 100%, while memory usage rose by a percentage that depended on the number of clients per second. To my surprise, with OpenLiteSpeed the memory increase was similar, but the CPU barely moved during the load. I ran all of these tests with vanilla settings. Was I doing something wrong? Did I miss any obvious optimisation? Are these results expected? I'd like to hear your opinions.

I'm running my production servers on CyberPanel, but it's utterly broken. I'd like to move to Hestia; however, if load capacity drops by a margin like this, it could be an issue.